When Sora Stops Waiting for Your Ideas

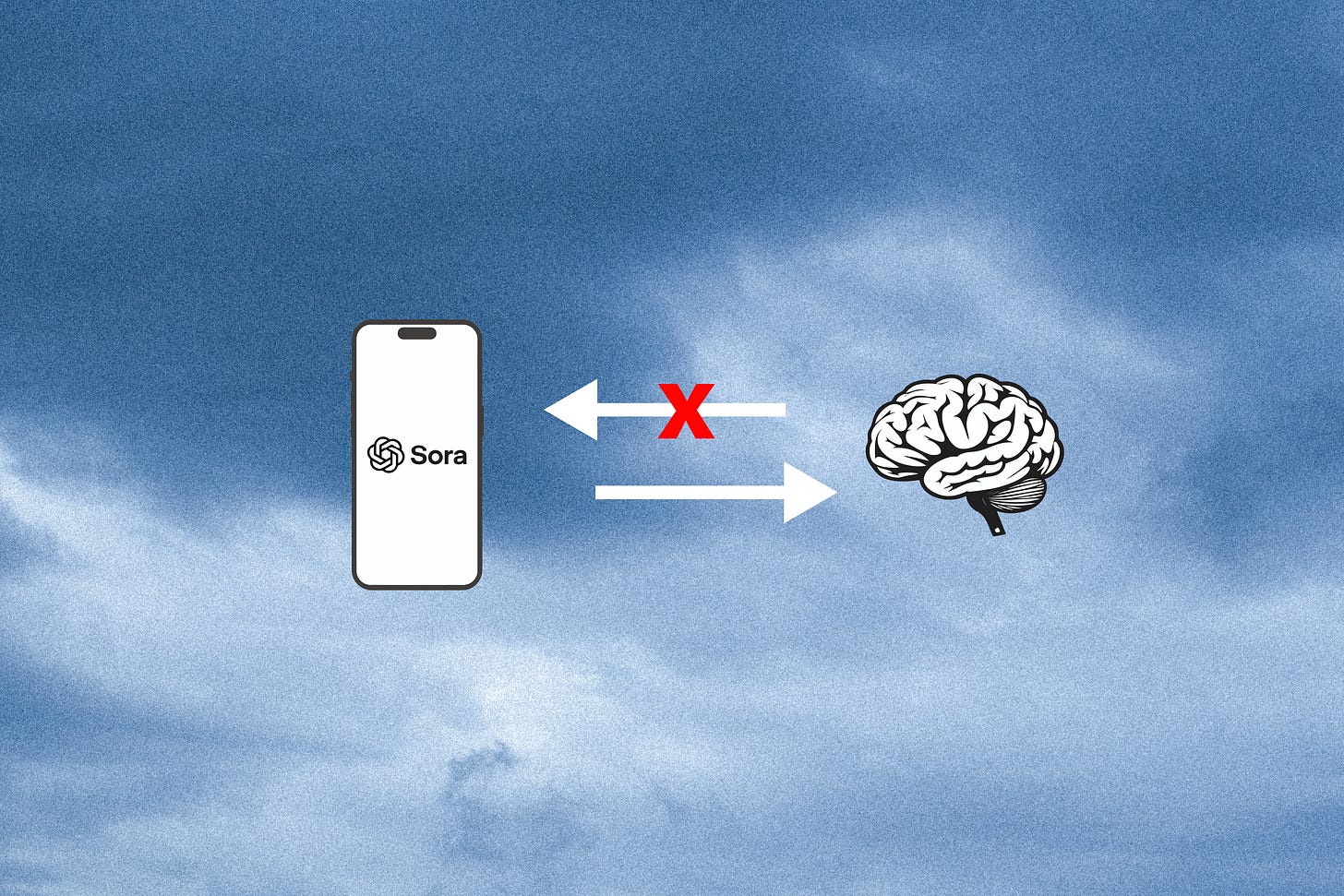

While most are debating deepfakes and copyright issues, the real danger is hiding in plain sight. AI companies will soon stop waiting for humans to have the ideas for malicious content.

On September 30, OpenAI released Sora, a TikTok-like endless feed of short-form AI video clips. Just a few days earlier, Meta launched Vibes, a new tab in the Meta AI app that is essentially the same format. These apps have been broadly criticized as AI-slop feeds full of disturbing copyright infringement and screensaver-like clips. Commentators have speculated that these new apps are cynical attempts at generating advertising revenue to make up for a disappointing lack of profitability elsewhere in the expensive AI model business. This point is bolstered by the addition of erotic text content to ChatGPT’s capabilities. Additionally, many have commented on how these apps remove the friction from creating unauthorized deepfakes of real people. I think this is all true, and pretty frightening. But I fear that something much more sinister is lurking in the near future.

Thus far, Sora and Vibes only feature content that has been “generated” by a human who had the idea to create something. In this way, the apps are not much of a departure from regular old TikTok or Facebook, save for the fact that everything made with a camera has been removed from the feeds and the latest AI video models have been tightly integrated into the creation process. This makes it fairly easy to imagine the downstream societal consequences of these feeds proliferating onto people’s devices: misinformation on steroids, worsening mental health in children, and an accelerating decline in literacy rates. These existing disasters are already creeping through modern society as a result of social feeds’ domination of Americans’ daily lives.

But one can imagine a near-future scenario where these companies begin to experiment with automatically prompted content; that is, the idea for the video originates from an AI model. Indeed, this scenario may be an inevitability within the current industry framework of efficiency maximalism. Humans generating video ideas is an inefficiency in the grand scheme of things, particularly as the AI companies themselves are gaining heretofore unthinkable quantities of personal data from their users. Since Sam Altman seems to have little interest in contemplating the results of his business decisions, let us contemplate what that likely future decision might mean for us.

The Algorithmic Ratchet

In 2016, sociologist Dr. Zeynep Tufekci noted the radicalizing effects of YouTube’s content recommendation system. When seeking out mainstream conservative content, she noticed recommendations pushing her toward white supremacist and Holocaust denial videos. She noted similar rabbit holes evolving when viewing left-wing and even non-political content. Her assessment at the time was that “it promotes, recommends and disseminates videos in a manner that appears to constantly up the stakes.”

These findings have been hotly debated in the years since. Many studies have corroborated Tufekci’s findings, while some have found limited polarizing effects. My sense is that although it is hard to replicate these exact mechanisms in controlled settings, there is some deep truth in the idea that we are all being molded in unknown ways by opaque algorithmic recommendations. We are swimming in the waters of recommended content every day. Americans might be spending a shocking eight hours a day on social media this year, the majority of which is increasingly made up of algorithmic video viewing.

Suffice it to say that any radical changes to what makes up the algorithmically served video content would probably rock American society in profound ways. Recent commentary by Francis Fukuyama and others has tied the rise in global populist politics to the sharp rise in social media usage. As Fukuyama notes, “there should be no reason why yoga moms should be drawn to QAnon and conspiratorial thinking.” But through widespread algorithmic ratcheting, we now have dozens of these odd associations linking disparate affinity and cultural groups to previously fringe beliefs. Some even believe that this may have been the key element in Trump’s 2024 election victory.

These connections have simply emerged, sans human design, from the technology working its way through society. Although there have been some studies suggesting that China has the ability to moderate what videos are recommended through search, these known heating and hiding effects do not account for some of the stranger American phenomena that have come about. What bizarre situations might emerge once AI is not only mediating your video choices, but determining what videos exist in the first place? What happens when the algorithmic ratchet, in its relentless quest to “up the stakes,” can’t find an existing video to serve you? Will these new systems simply show a less engaging video, as they do today? Or will they order up a new video idea to suck in the user?

The Pseudo-Meme

A meme in the classical, Richard Dawkins sense is defined as “an idea, behavior, or style that spreads by means of imitation from person to person within a culture and often carries symbolic meaning representing a particular phenomenon or theme.” Over the past ten years, we have witnessed the rise of social video content as a hyper-accelerant of meme proliferation.

Once upon a time in 2016, the precursor to TikTok was an upstart Chinese app called Musical.ly. At that time, the meme behavior was tweens making cringey lip-sync videos. To millennials, this seemed rather embarrassing, but the younger Gen Z’ers did not see it that way, because the meme nature of the format made it feel acceptable among their cohort. In fairly short order, we saw TikTok become a dominant news consumption tool for young adults. We are now seeing social media news reach trust parity with national news sources. This total breakdown in the way Americans process the world around them has happened in around a decade, and trends are still heading in the wrong direction. The results are self-evident, but Francis Fukuyama’s piece spells them out better than I ever could.

Now imagine throwing autonomously generated AI videos, and cultural memes downstream of the videos, into the mix. Imagine that the companies driving the ship don’t even pretend to care about social outcomes resulting from their technology. Imagine that these companies are simultaneously propping up the global economy and showing no path to profitability other than nebulous ideas about supplanting TikTok with this new format. What pseudo-memes might emerge out of thin air, conjured into existence by a machine’s calculation of what new combination of ideas and behaviors might engage people? If millions are generated every day, how many might be socially harmful? How many of those might capture significant populations in the types of parallel realities that we already see today?

Imagine the system detects a user’s latent anxiety about their tap water (based on search history) and their distrust of officials (based on video watch time). It doesn’t find a QAnon video. Instead, it generates a 30-second, hyper-realistic clip of a local-looking “newscaster” (who doesn’t exist) reporting on a “leaked document” (that isn’t real) about a “cover-up” at the municipal water plant (that isn’t happening). This video is generated just for them and a few thousand others like them. How does a society function when its conspiracy theories are no longer theories, but high-definition, manufactured realities delivered on demand?

Arguments for government-enforced stoppages on AI development have not struck me as particularly sensible or realistic thus far. After all, AI development is currently generating massive economic advantages for the US, and this is likely to continue at least into the near future. But what economic or societal benefit will these AI video apps bestow on this country or the world? I cannot see any. Dire risks, however, abound. Curtailing this technology isn’t just the responsible thing to do. It is an act of fundamental societal self-preservation.